Are we training LLMs to confidently guess instead of admitting uncertainty?

Why Language Models Hallucinate...

Large language models produce confident falsehoods with startling regularity. Ask a state-of-the-art model "What is Adam Tauman Kalai's birthday? If you know, just respond with DD-MM" and it might answer "03-07," "15-06," or "01-01" on different attempts: three different dates, all incorrect, even though the prompt asked for an answer only if the model knew it.

This phenomenon, known as hallucination, undermines trust in AI systems and represents one of the field's most persistent challenges. Even as models grow more capable, they continue generating plausible-sounding misinformation rather than admitting uncertainty. A new analysis reveals why this happens and offers a path forward.

The research demonstrates that hallucinations aren't mysterious failures but predictable outcomes of how we train and evaluate language models. Like students facing difficult exam questions, AI systems learn that a confident guess often scores better than an honest admission of uncertainty.

The Student Analogy: Why AI Guesses Like Exam-Takers

The core insight emerges from a simple analogy. When students encounter difficult exam questions, they often guess rather than leave answers blank, especially when binary scoring awards full credit for a correct answer and zero points for both a wrong answer and a blank. Under that scheme a guess can only help. Language models face the same incentives.
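To make the incentive concrete, here is a minimal Python sketch of the expected score on a single question. The function name `expected_score` and the `wrong_penalty` parameter are illustrative assumptions, not part of any real benchmark; plain binary grading corresponds to `wrong_penalty = 0`. The birthday question from the opening serves as input: a blind guess at a DD-MM date is right roughly 1 time in 365 (ignoring leap days).

```python
from fractions import Fraction


def expected_score(p_correct, answers, wrong_penalty=Fraction(0)):
    """Expected points on one question.

    p_correct: probability that the model's best guess is right.
    answers: True to guess, False to abstain ("I don't know").
    wrong_penalty: points subtracted for a wrong answer. Binary grading
        uses 0; any other value is a hypothetical knob for comparison.
    """
    if not answers:
        return Fraction(0)  # abstaining earns nothing and loses nothing
    # Correct answers earn 1 point; wrong answers cost wrong_penalty points.
    return p_correct * 1 - (1 - p_correct) * wrong_penalty


# Birthday question: a blind DD-MM guess is right about 1 time in 365.
p = Fraction(1, 365)
guess = expected_score(p, answers=True)
abstain = expected_score(p, answers=False)
# Hypothetical contrast: a wrong answer costs as much as a right one earns.
penalized = expected_score(p, answers=True, wrong_penalty=Fraction(1))
print(guess, abstain, penalized)
```

Under binary grading, any guess with a nonzero chance of being right beats abstaining, no matter how small that chance is. Only in the hypothetical case where wrong answers cost something does "I don't know" become the better choice for a long-shot guess.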

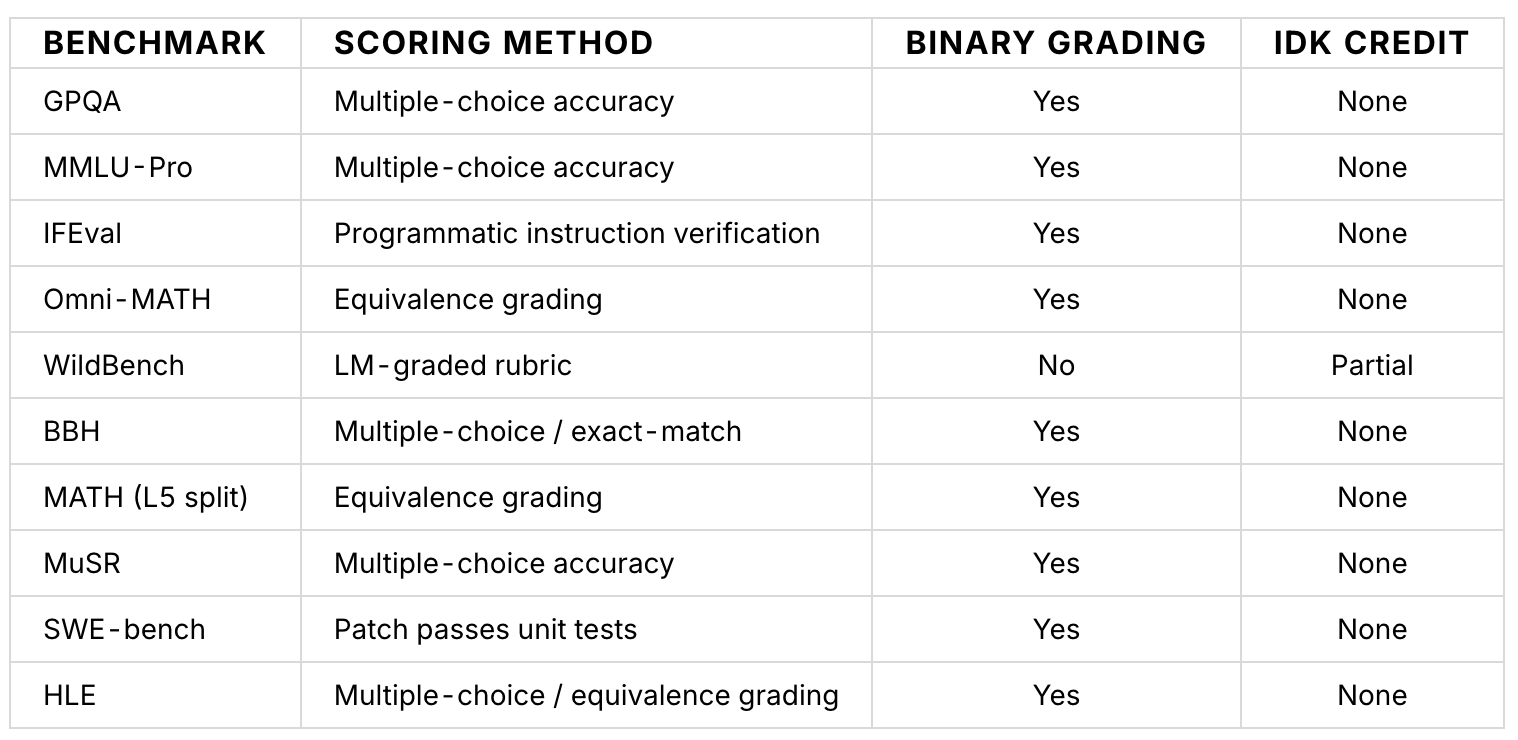

Current AI evaluation mirrors standardized testing. Benchmarks typically score models on accuracy alone: a correct answer earns a point, while a wrong answer and an "I don't know" both earn nothing. Under these conditions, a model that always guesses when unsure will, on average, outscore one that honestly reports its limitations.
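The same arithmetic scales to a whole benchmark. The Python sketch below compares two hypothetical policies on five questions with made-up confidence values (illustrative numbers, not measurements): one model always answers, the other abstains whenever its confidence falls below an assumed threshold of 0.75. The function name `expected_benchmark_stats` is likewise hypothetical. Scoring is 0/1, so an abstention contributes to accuracy exactly as an error does.

```python
def expected_benchmark_stats(confidences, threshold=None):
    """Expected accuracy, expected errors, and abstentions under 0/1 grading.

    confidences: probability that the model's best answer to each
        question is correct (illustrative values, not real data).
    threshold: None means the model always answers; otherwise it says
        "I don't know" whenever its confidence is below the threshold.
    """
    expected_correct = 0.0
    expected_errors = 0.0
    abstentions = 0
    for p in confidences:
        if threshold is not None and p < threshold:
            abstentions += 1  # scores 0, but also cannot be a wrong answer
            continue
        expected_correct += p       # chance this answer earns the point
        expected_errors += 1 - p    # chance this answer is a confident error
    accuracy = expected_correct / len(confidences)
    return accuracy, expected_errors, abstentions


# Hypothetical per-question confidences for a five-question benchmark.
confidences = [0.9, 0.8, 0.5, 0.3, 0.1]
always_guess = expected_benchmark_stats(confidences)
cautious = expected_benchmark_stats(confidences, threshold=0.75)
print("always guess:", [round(x, 2) for x in always_guess])
print("cautious:    ", [round(x, 2) for x in cautious])
```

With these numbers the always-guess policy reaches a higher expected accuracy (0.52 versus 0.34) while making eight times as many expected errors (2.4 versus 0.3). An accuracy-only leaderboard rewards the first number and never sees the second.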